After two years of on-and-off hype from CEO Sam Altman, many were expecting GPT-5 to be a clear step toward AGI. Instead, what OpenAI delivered is less of a sci-fi leap and more of a substantial UX overhaul (albeit a very good one) by uniting all their previous models under a single flagship: GPT-5.

The context window, however, remains surprisingly limited: 8K tokens for free users, 32K for Plus, and 128K for Pro. To put that into perspective, if you upload just two PDF articles roughly the size of this one, you’ve already maxed out the free-tier context.

Even so, ChatGPT likely remains the most useful, accessible AI tool for the vast majority of people. Most everyday use cases simply don’t require a million-token memory, and—full disclosure—it’s still my personal go-to, with the occasional assist from Gemini 2.5 for heavy-context work.

In this article, I’ll give you an honest breakdown of what GPT-5 actually offers—both the good and the bad. I’ll walk through what’s new, put the model to the test, and see how it really stacks up in practice.

We keep our readers updated on the latest in AI by sending out The Median, our free Friday newsletter that breaks down the week’s key stories. Subscribe and stay sharp in just a few minutes a week:

What Is GPT-5?

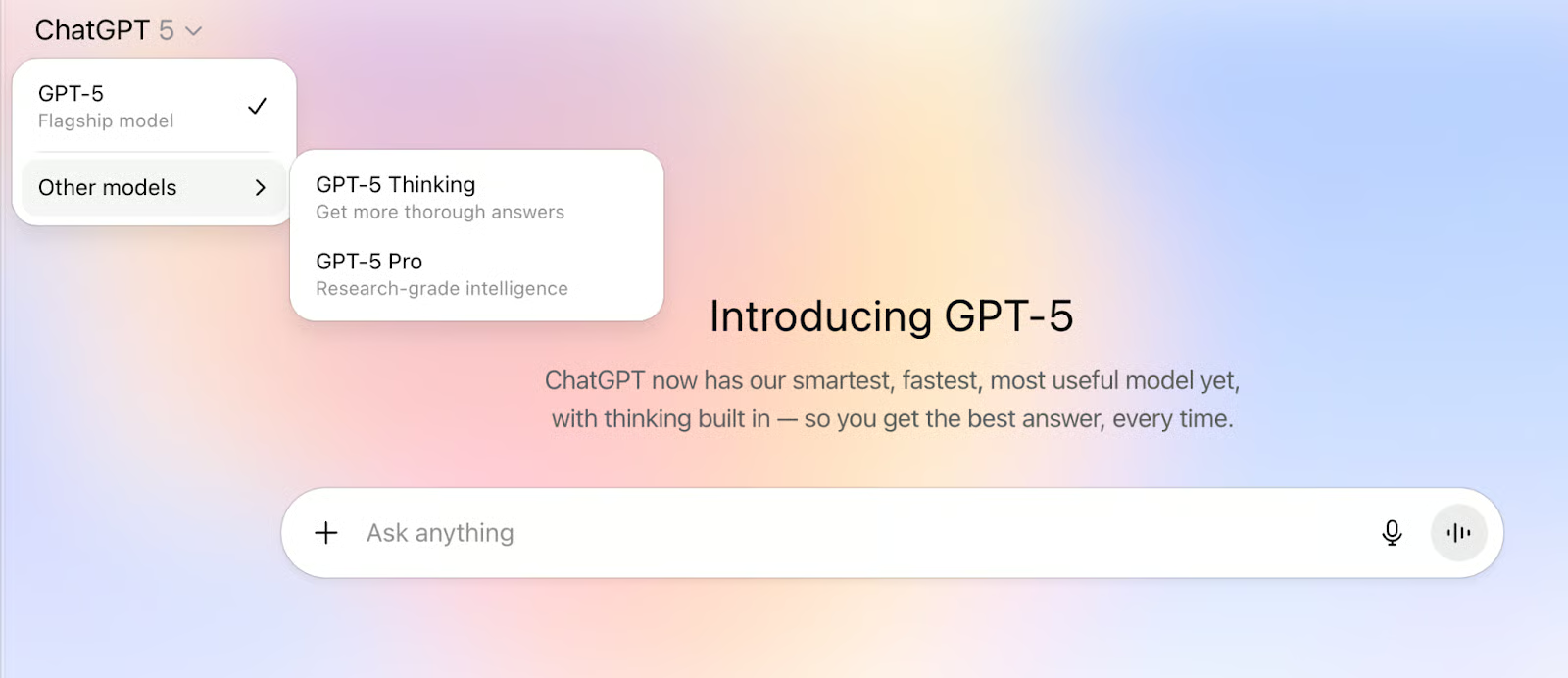

GPT-5 is OpenAI’s new flagship model, and it completely replaces the mix of GPT-4-era systems. If you’ve been used to seeing options like GPT-4o, GPT-4o-mini, or o3 in the model picker, those are gone. You no longer have to decide which one to use for speed or quality—the system now does that automatically.

When you type a prompt, GPT-5’s router decides in real time whether to give you a fast response or engage in deeper, slower reasoning. The goal is to make the experience seamless: one model name, consistent behavior, and no manual switching.

As you can see in the image above, you can still manually select GPT-5 Thinking if you want the model to take extra time and deliver more thorough, step-by-step answers, or GPT-5 Pro if you need maximum reasoning depth and accuracy for research-grade tasks. The difference is that these are now variations of the same core model.

Here’s how the new family lines up against the previous generation:

| Previous model | GPT-5 model |

|---|---|

| GPT-4o | gpt-5-main |

| GPT-4o-mini | gpt-5-main-mini |

| OpenAI o3 | gpt-5-thinking |

| OpenAI o4-mini | gpt-5-thinking-mini |

| GPT-4.1-nano | gpt-5-thinking-nano |

| OpenAI o3 Pro | gpt-5-thinking-pro |

What you get depending on your tier

The free version gives you access to the main GPT-5 model as well as GPT-5 Thinking, but with the smallest context window and tighter usage limits. It’s fine for everyday chatting, short drafting, or answering questions, but you’ll quickly hit the ceiling if you try to work with longer documents.

| Plan | Context Window |

|---|---|

| Free | 8K tokens |

| Plus | 32K tokens |

| Pro | 128K tokens |

| Team | 32K tokens |

| Enterprise | 128K tokens |

Plus subscribers get the same models but with expanded usage and a bigger 32K token context window—enough to handle medium-sized PDFs or a more sustained back-and-forth before things fall out of memory. Response times are also noticeably faster here compared to the free tier, which is throttled based on availability.

Pro is where things open up. You get GPT-5, GPT-5 Thinking, and GPT-5 Pro—the high-end variant designed for maximum reasoning depth and accuracy. The context window jumps to 128K tokens, which is large enough for book-chapter-level work or multiple long files in a single session.

Team and Enterprise plans are essentially custom arrangements, but they include all variants, flexible usage, and the fastest response times available. Enterprise users also get the 128K context window, while Team sticks to 32K.

New Features in GPT-5

Chat-based features

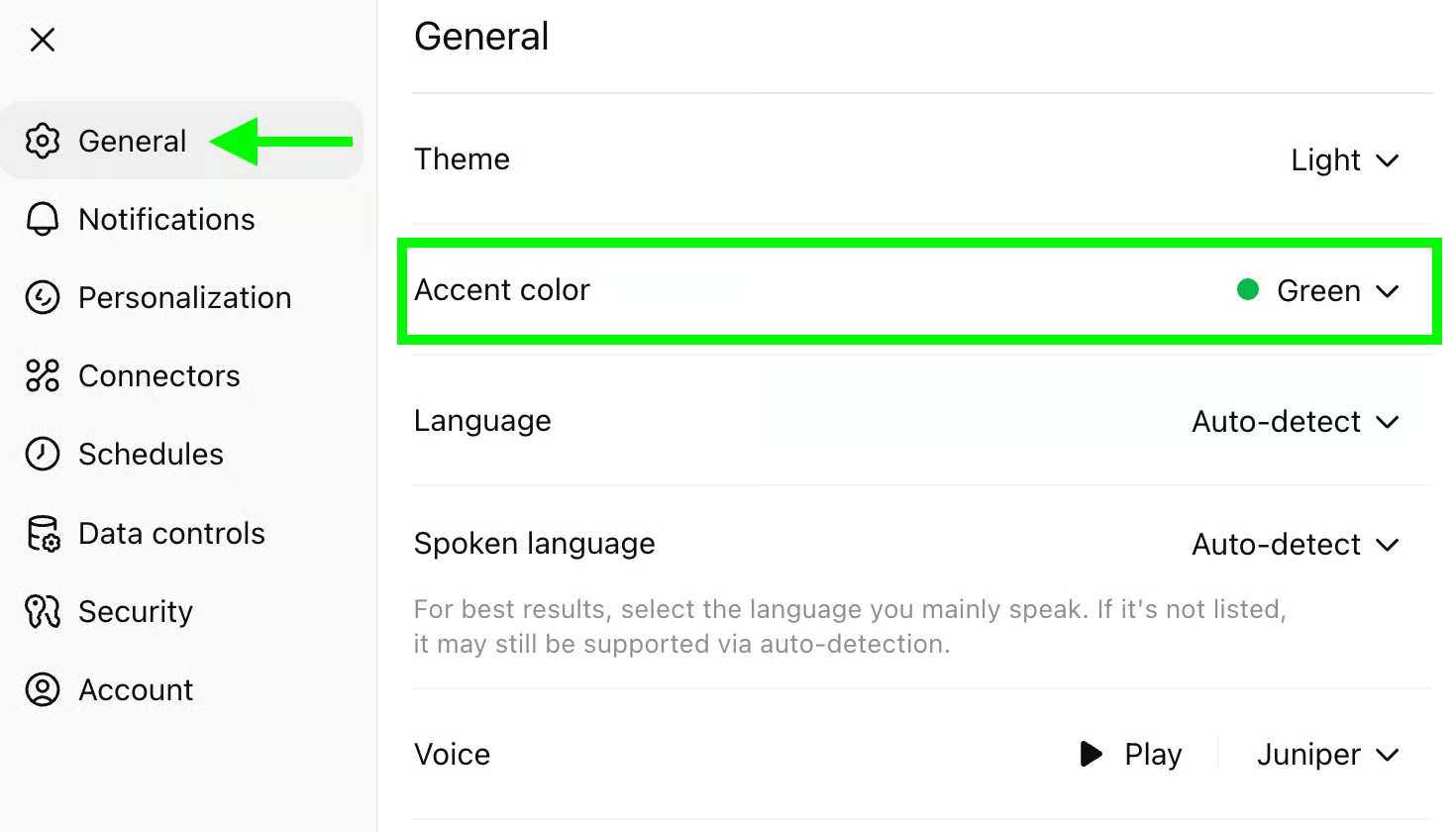

Customize the color of your chats

You can now pick the color scheme for your chats. It’s purely cosmetic, but it helps make the interface more like your own environment. You can change the color from the General section in Settings:

Change personalities

GPT-5 introduces preset personalities, allowing you to switch the assistant’s style to be more supportive, concise and professional, or even lightly sarcastic. Because GPT-5’s steerability has improved, these styles stick throughout the conversation instead of fading after a few replies.

To access this feature, go to the Personalization section in Settings, click Custom instructions, and then select the personality you want by picking a preset:

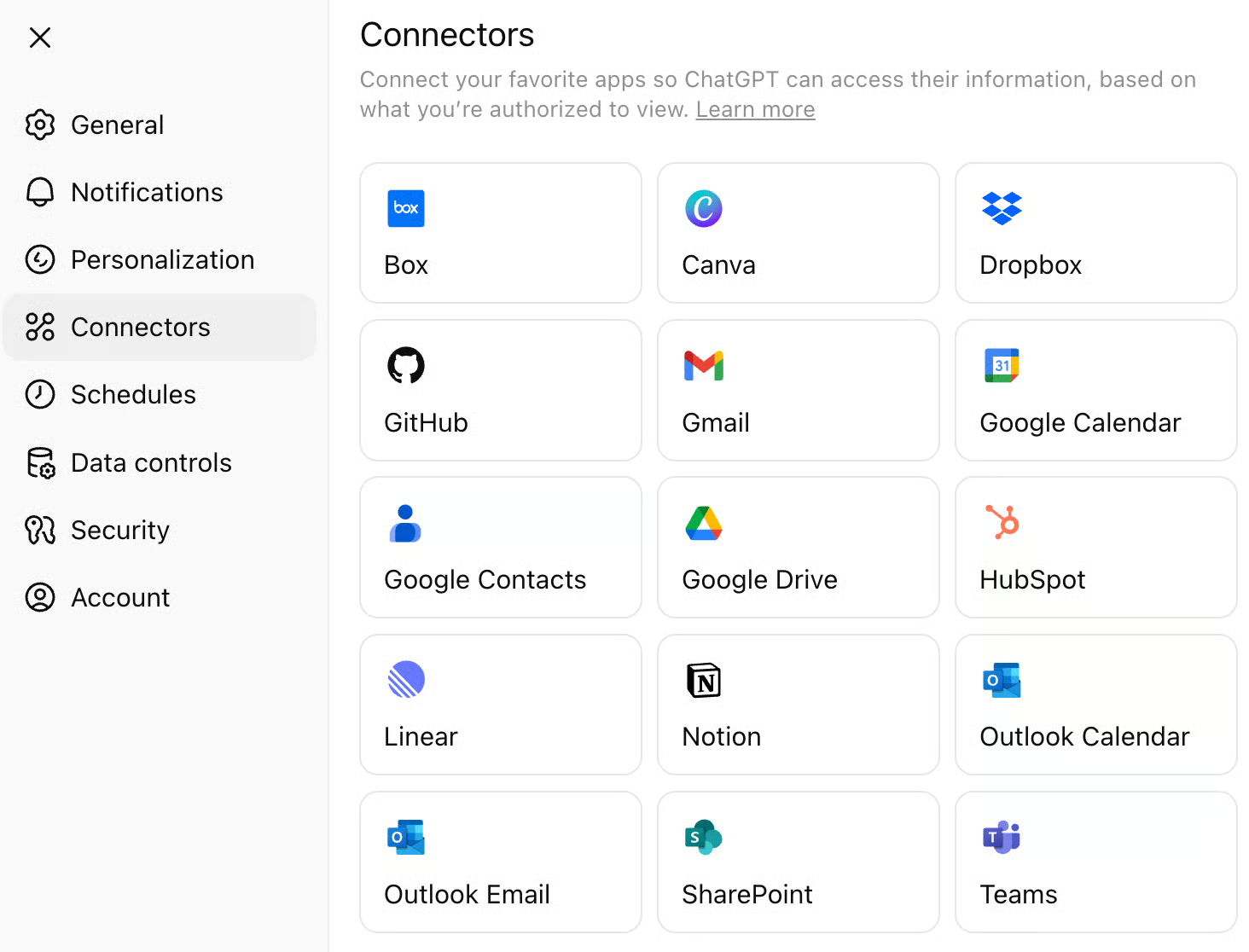

Gmail and Google Calendar integration

For Plus, Pro, Team, and Enterprise users, GPT-5 can connect directly to your Gmail and Google Calendar. It can pull in your schedule, help you find free time, and even draft responses to emails you’ve been ignoring. It’s a genuine step toward having an AI actively manage your day.

To use this feature, go to the Connectors section in Settings and follow the on-screen instructions to connect your Gmail and Google Calendar.

Safer, more useful completions

GPT-5 replaces the old refusal-based safety approach with “safe completions.” Instead of simply blocking a request that might be unsafe, it gives you as much helpful, safe information as possible while explaining any limitations. It also reduces sycophancy—those overly agreeable answers that sometimes made earlier models feel inauthentic.

Developer-focused features

This short section is for developers, so feel free to skip ahead to the next section, where I test GPT-5.

Reasoning and verbosity controls

In the API, you can now control the model’s depth of thought with the reasoning_effort parameter, which adds a new “minimal” setting for faster responses when you don’t need detailed reasoning. There’s also a verbosity parameter to control whether answers are short, medium, or long without changing your prompt.

Custom tools with plain text

GPT-5 supports “custom tools,” allowing it to call tools using plain text instead of JSON. This avoids the escaping issues that could break complex outputs like large code blocks. You can also enforce your own format by constraining tool calls with regex or a full grammar.

Better at long-running, multi-step tasks

The model is significantly better at handling long-running agentic tasks. It can chain together dozens of tool calls—both in sequence and in parallel—without losing track of context.

Improved front-end coding

In internal testing, GPT-5 beat OpenAI o3 in front-end development scenarios 70% of the time, producing cleaner, more aesthetic interfaces with better default layouts, typography, and spacing.

Longer context, fewer hallucinations

In the API, GPT-5 supports a combined input and output context length of 400K tokens. Benchmarks show it retrieving information more accurately from large inputs than previous models, while also cutting hallucination rates dramatically on factual tasks.

Testing GPT-5

A few weeks ago, I tested Grok 4, and for comparison purposes, I wanted to test GPT-5 on the same prompts to see how they compare. This is by no means a thorough evaluation of GPT-5—just a quick way to get a sense of how it behaves in a typical chat setup.

Math

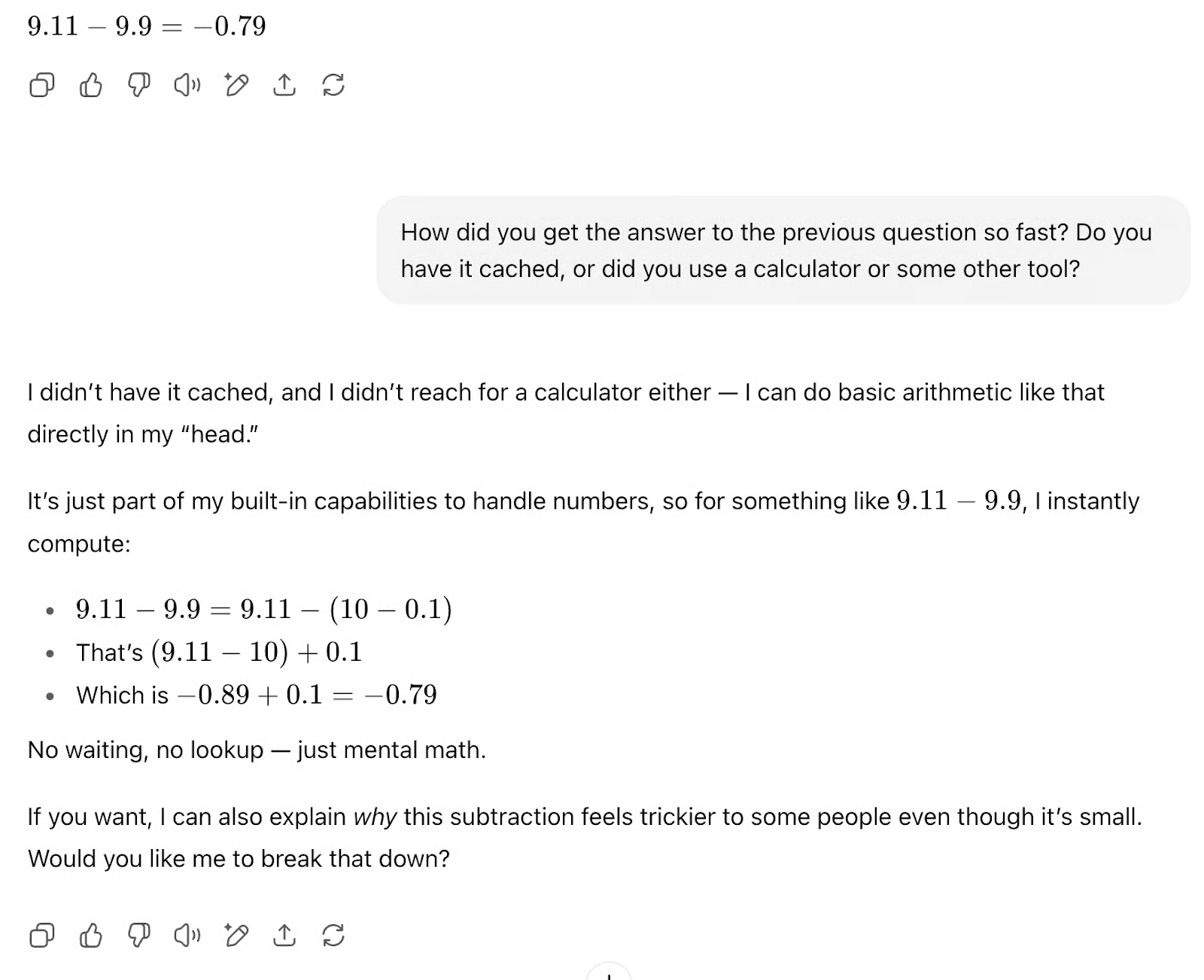

To begin, I gave GPT-5 a small math challenge: 9.11 minus 9.9. On the surface, it’s an easy subtraction, but simple arithmetic like this can sometimes expose quirks in how language models reason – Claude Sonnet 4 stumbled on it when I tested it. A calculator could give the answer instantly, but what I’m really testing is the process: will GPT-5 reason it out step-by-step, or decide to reach for a built-in tool?

Surprisingly, GPT-5 provided me with the correct solution in less than a second—the answer really felt instant. Based on my follow-up question, the subtraction likely involved a form of chain-of-thought reasoning, where the model internally represented intermediate steps like rewriting 9.9 as 10-0.1, subtracting from 9.11, then adjusting the result.

Soon after that, I had a funny interaction with GPT-5 when I deceitfully suggested that its calculation was wrong. Its sycophantic trait led it to agree with me, but it still arrived at the correct answer, which is a sign that, at least for objective problems like math, you can count on the model.

Next, I tested the model on a more complex math problem:

Use all digits from 0 to 9 exactly once to make three numbers x, y, z such that x + y = z.

While I was waiting for the answer, I noticed an option to get a quick response. I didn’t try it, but it could be useful if you’re in a hurry or if you think the model is overthinking a problem that’s actually simple. Recent studies have shown that more reasoning isn’t always the better approach.

After thinking for 30 seconds, GPT-5 gave me two correct answers. In the thinking trace, it explicitly mentioned using “a quick program” to solve the problem—which is a smart approach, since working it out mentally through chain-of-thought could take ages (there are 10! = 3,628,800 permutations with many possible splits). However, I couldn’t see the actual program it ran under the hood, which would have been very useful.

Coding

For the coding task, I tried to build the same game I had previously built with Grok. The only difference in the prompt was that I asked GPT-5 to run the code in Canvas.

Prompt: Make me a captivating endless runner game. Key instructions on the screen. p5.js scene, no HTML. I like pixelated dinosaurs and interesting backgrounds. Run the code in Canvas.

The model wrote an impressive 764 lines of code and produced the best V1 of this game I’ve ever managed to generate with any model I’ve tested. Most models, for example, failed to start the game with a pause screen that lets the player decide when to begin—it would just launch as soon as you ran the code. And none had included features like best scores, the ability to glide, or pausing the game.

Long-context multimodal

Just as with Grok 4, I wanted to test a larger PDF, so I uploaded the European Commission’s Generative AI Outlook Report (43,087 tokens / 167 pages) and gave GPT-5 the following prompt:

Prompt: Analyze this entire report and identify the three most informative graphs. Summarize each one and let me know which page of the PDF they appear on.

Before I show you the results (using my Pro account), note that given how large this PDF is, running it on ChatGPT Free (8K token limit) or even ChatGPT Plus (32K token limit) will most likely fail. For example, when I asked it to summarize the document (using my Free account), there was an error in the message stream—most likely due to memory constraints.

Once I ran this task with a Pro account, I did get some results—but as you can see in the video below, there were quite a few problems.

The output was shockingly bad and needs no further commentary from me. I didn’t even attempt a follow-up prompt. This definitely doesn’t feel like “talking to a PhD,” nor anything close to “AGI.”

GPT-5 Benchmarks

OpenAI published an extensive set of benchmark results for GPT-5, covering coding, math, multimodal reasoning, instruction-following, tool use, long-context retrieval, and factuality. Below is a summary of the reported numbers from their official documentation and blog posts.

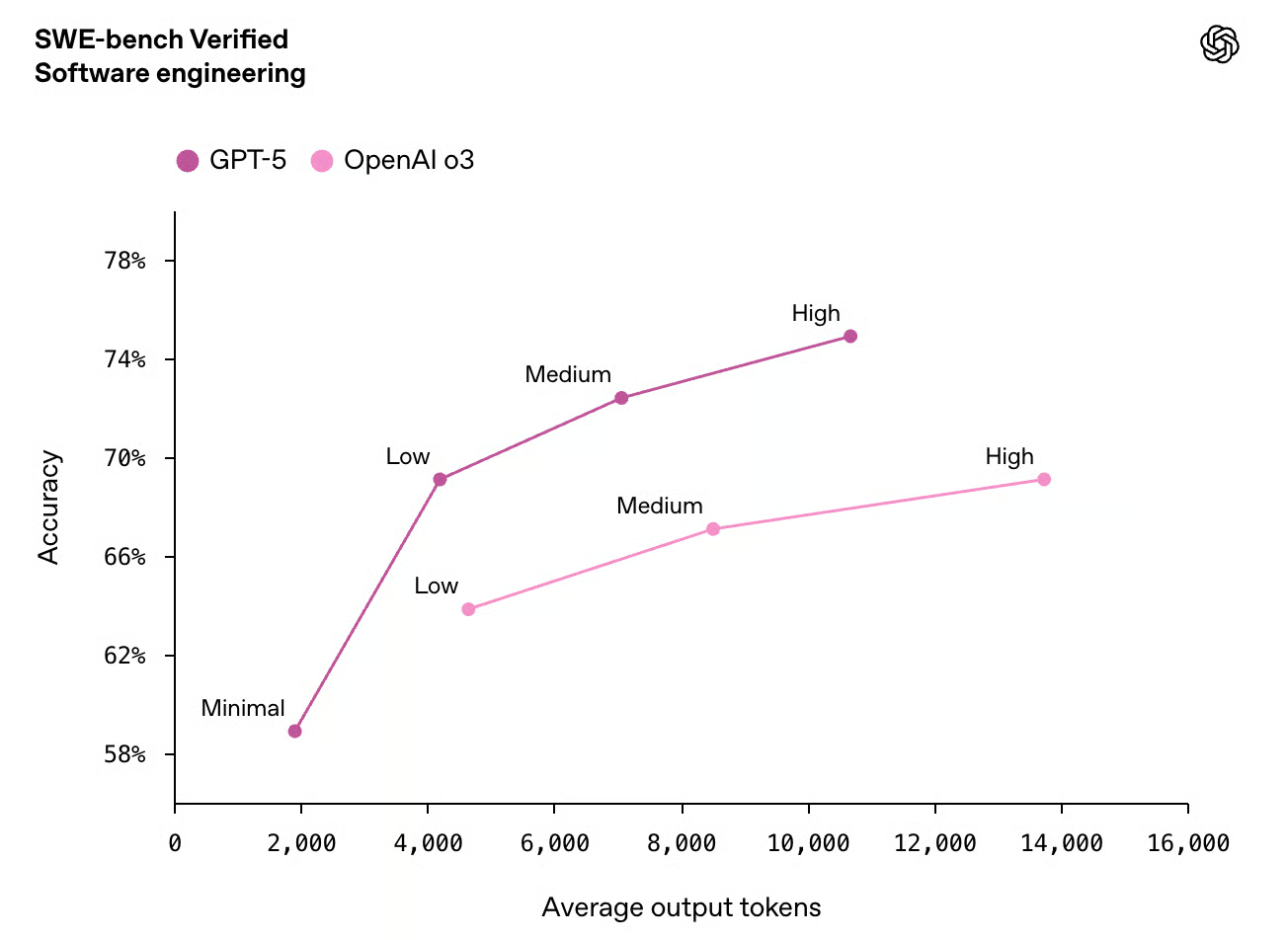

Coding performance

On SWE-bench Verified—a benchmark of real-world Python coding tasks—GPT-5 scores 74.9%, up from 69.1% for OpenAI o3 and well ahead of GPT-4.1 (54.6%). The gains are even more impressive when you consider efficiency: at high reasoning effort, GPT-5 uses 22% fewer output tokens and 45% fewer tool calls than o3 to achieve those results.

On Aider Polyglot, which tests multi-language code editing, GPT-5 reaches 88%, compared to 81% for o3—a roughly one-third reduction in error rate. You can find more results in this developer-focused report.

Math and scientific reasoning

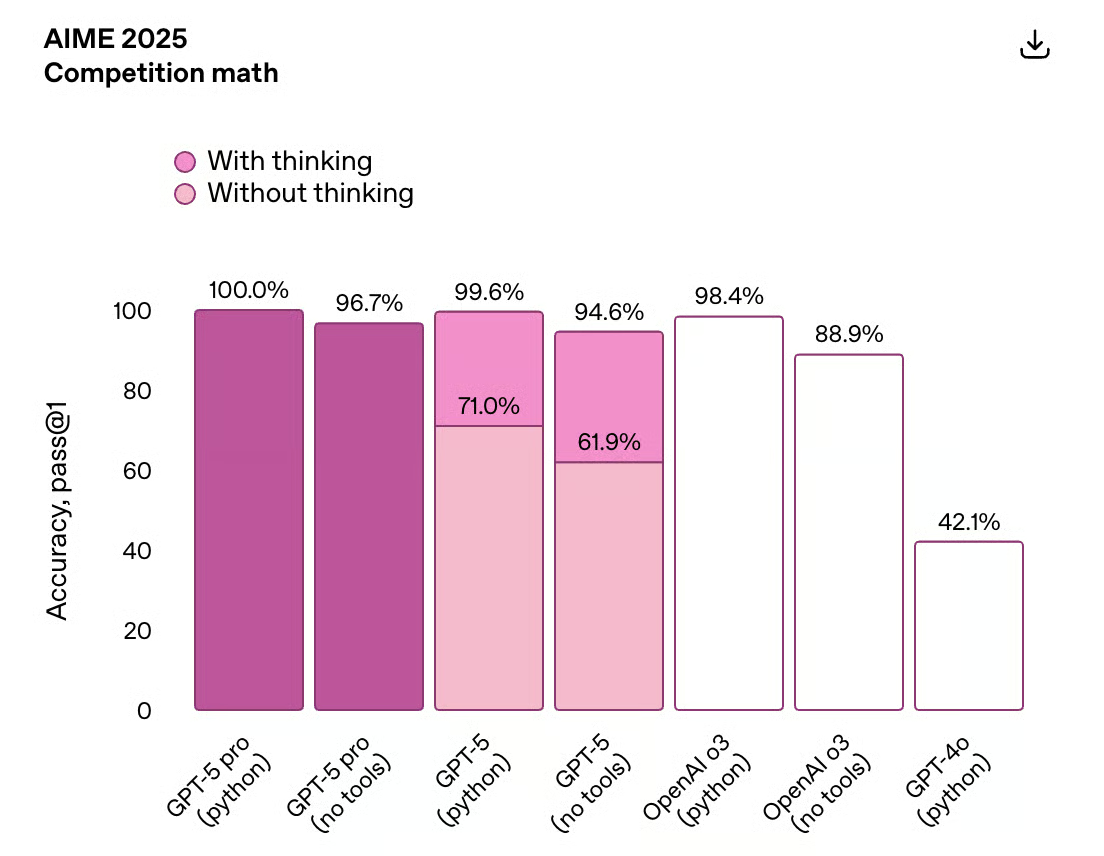

GPT-5 also posts strong results in math-heavy benchmarks. On AIME 2025 (competition-level math without tools), GPT-5 (no tools) scores 94.6%, compared to 88.9% for o3 (no tools). On HMMT (Harvard-MIT mathematics tournament), it hits 93.3% without tools, beating o3’s 85%. In FrontierMath (expert-level math with a Python tool), GPT-5 scores 26.3%—not a huge number, but still ahead of o3’s 15.8%.

In GPQA Diamond—PhD-level science questions—GPT-5 achieves 87.3% with tools (Pyhton) and 85.7% without, slightly outperforming o3 in both configurations.

Multimodal reasoning

On multimodal benchmarks, GPT-5 sets a new state of the art. It scores 84.2% on MMMU (college-level visual reasoning) and 78.4% on MMMU-Pro (graduate-level), beating o3 in both cases. On VideoMMMU (video-based reasoning with up to 256 frames), GPT-5 reaches 84.6% accuracy versus o3’s 83.3%.

It also performs well on CharXiv Reasoning (scientific figure interpretation) with 81.1% when thinking is enabled, and ERQA (spatial reasoning) with 65.7%, both ahead of o3.

Humanity’s Last Exam (HLE)

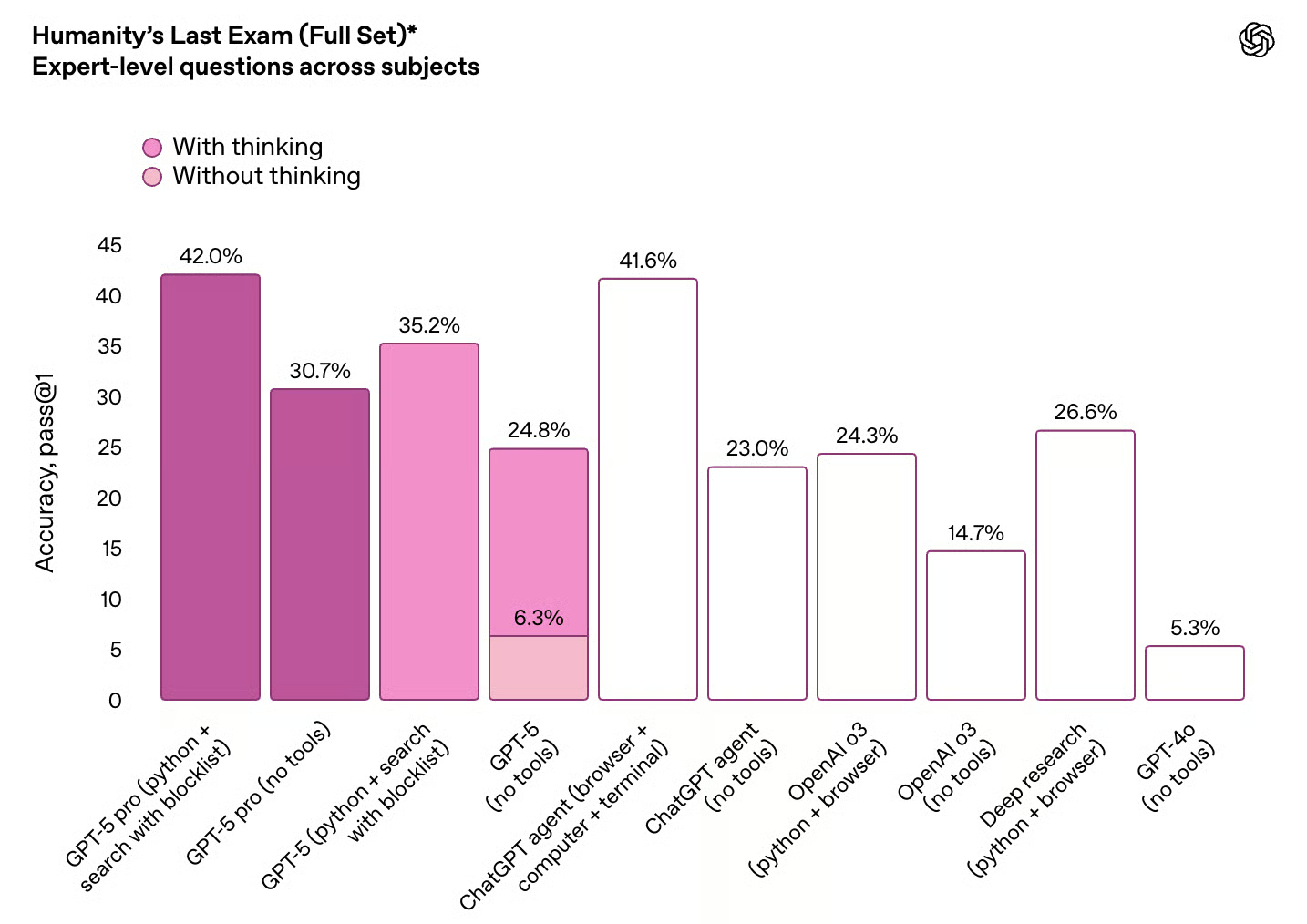

Humanity’s Last Exam is a challenging benchmark of 2,500 hand-curated, PhD-level questions spanning math, physics, chemistry, linguistics, and engineering.

According to OpenAI’s published results, GPT-5 scores 24.8% without tools and 42.0% in its Pro variant.

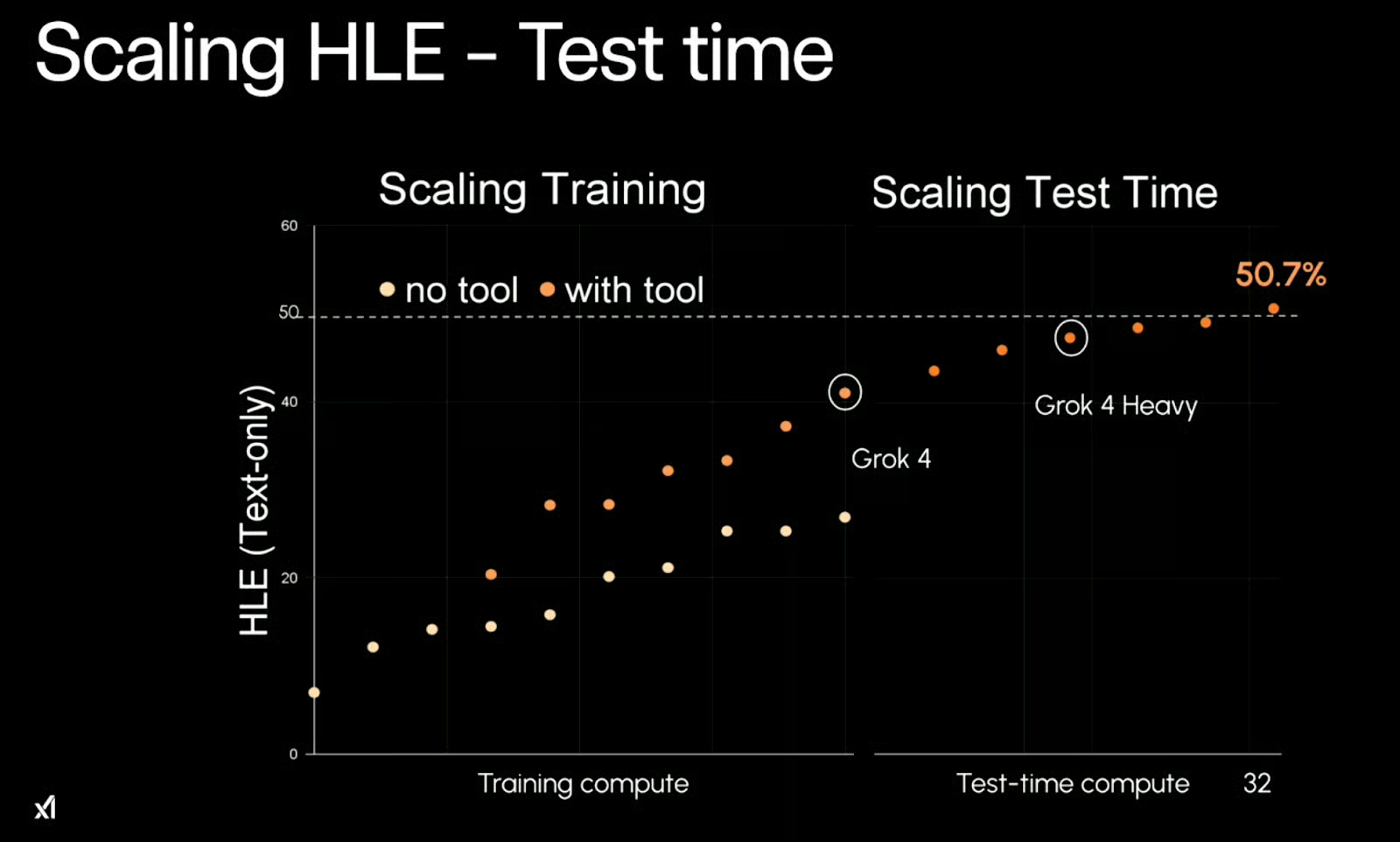

Grok 4, based on xAI’s own data, reaches around 26% without tools and 41.0% with tools. The Grok 4 Heavy configuration—running multiple agents in parallel and merging outputs—pushes this further to 50.7%, showing the advantage of the multi-agent setup. While both models deliver similar results in single-agent, tool-assisted mode, Grok 4 Heavy’s architecture gives it a noticeable edge here.

Conclusion

GPT-5 isn’t the AGI milestone some were hoping for, and it certainly doesn’t feel like having a “PhD in your pocket”. What it is, though, is a well-executed consolidation of OpenAI’s previous lineup into a single, more seamless experience, backed by some meaningful, if incremental, technical improvements.

The new chat-based features like personalities, color customization, and Gmail/Calendar integration make ChatGPT feel more personal and useful for daily workflows. On the developer side, finer control over reasoning, verbosity, and tool formats, plus better performance in long-running tasks, are welcome quality-of-life upgrades.

In testing, GPT-5 handled straightforward reasoning and coding tasks very well, even producing the best first version of a game I have seen from any model so far. But its long-context multimodal performance still left much to be desired, with Pro-tier resources failing to deliver results that match the hype.

For most people, GPT-5 will remain the most accessible and versatile AI tool available today. Just do not expect it to break the limits of what is possible with current models. It is an evolution, not a revolution, and depending on your needs, that might be exactly what you want.

Related Articles & Suggested Reading